AI has been dominating our LinkedIn feeds for the past three years, and tools to evaluate agents have emerged just as quickly.

Now, there’s a plethora of developer-specific options when it comes to agent observability and measurement, from OpenAI Evals and LangSmith to Arize.

But one major voice has been noticeably underrepresented in this conversation: the AI product manager (PM) who’s leading development.

Evaluation and agent measurement tools should coexist, not compete

Agent evaluation tools built for developers are great at system-level diagnostics, like traces, latency, and model behavior. They're built for the technical work of debugging and fixing agents, and their primary unit of analysis is a run, trace, or eval suite.

But what if you care less about technical performance and more about how agents impact users, workflows, and business outcomes? What if you just want to know whether your agent is doing a good (or bad) job, and you don’t have time to sort through hundreds of logs?

Product people need to answer questions like these. To do so, they need visibility into user conversations, funnels, and sessions within agents and the broader app experience.

When you’re just using one or the other, it’s nearly impossible to tie AI agent usage to business outcomes. But together, dev- and PM-centric measurement tools complement each other in the agentic development lifecycle. That’s why many teams (and certainly the best teams) use both.

Let’s explore four use cases that PMs can better solve with product-centric measurement.

1. Quickly see who uses your agent (and how)

Dev tools show you how many times a chain ran, but they aren’t designed to instantly tell you if your enterprise accounts are actually adopting your agent. Is usage strongest within one department? Are your super users engaging with it? Or are you reaching an entirely new audience with this AI tool?

AI agent measurement tools, connected with the rest of your app data, let you segment and compare conversational AI performance across roles, accounts, and workflows. This tells you which personas and use cases actually benefit from an agentic experience, and where it causes more friction or risk than its more traditional counterpart.

By treating this as part of your broader product experience, you can begin to understand the long-term impact of your agent.
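
To make the idea of segmenting concrete, here is a minimal Python sketch of grouping agent conversations by role or account. It is not how any particular tool works: the event records and the field names (`account`, `role`, `resolved`) are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical event records: one row per agent conversation.
# The field names (account, role, resolved) are illustrative assumptions.
events = [
    {"account": "Acme", "role": "admin", "resolved": True},
    {"account": "Acme", "role": "analyst", "resolved": False},
    {"account": "Globex", "role": "admin", "resolved": True},
    {"account": "Acme", "role": "admin", "resolved": True},
]


def segment_usage(events, key):
    """Count conversations and compute the resolution rate per segment."""
    totals = defaultdict(lambda: {"conversations": 0, "resolved": 0})
    for event in events:
        bucket = totals[event[key]]
        bucket["conversations"] += 1
        bucket["resolved"] += int(event["resolved"])
    return {
        segment: {
            "conversations": stats["conversations"],
            "resolution_rate": stats["resolved"] / stats["conversations"],
        }
        for segment, stats in totals.items()
    }


by_role = segment_usage(events, "role")        # compare personas
by_account = segment_usage(events, "account")  # compare customers
```

Swapping the `key` argument is what lets the same data answer both "which roles use the agent?" and "which accounts are adopting it?"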

2. Monitor user sentiment and risk

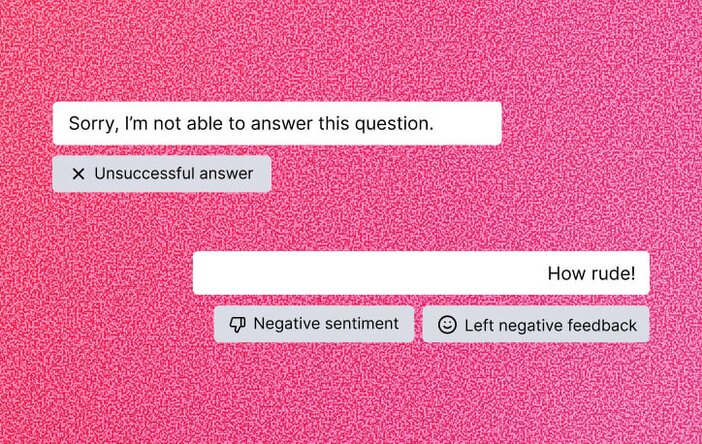

Eval suites flag when a model hallucinates, but they don't show you the frustrated user who received that bad output, tried three more times, and then churned. Typing in all caps, asking the same question multiple times, sharing confidential files and customer data: flagging these types of conversations is essential to ensuring a successful agent.

To surface an AI agent’s failures and potential risks, PMs need automatic issue detection and sentiment monitoring based on user conversations. Then, you can:

- Filter agent issues and errors by use case, so you can see what your agent is struggling to solve (and prioritize your AI roadmap to fix this).

- Watch replays and view conversational data of the exact user and agent interaction before, during, and after moments of rage.

- Highlight non‑compliant or risky behavior while still tying back to who was affected and what they were trying to do.

Keeping a close eye on which use cases lead to unhappy users (or risky behavior) will ultimately help you protect your company’s growth and reputation.
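
As a rough illustration of what automatic issue detection could look for, the sketch below flags two of the frustration signals mentioned above: all-caps messages and the same question asked repeatedly. The function names and thresholds (80% uppercase, three repeats) are assumptions made for this example, not a description of any product's detection logic, and it does not attempt to detect confidential data.

```python
import re


def is_shouting(message, min_letters=8):
    """Rough 'all caps' check: at least 80% of the letters are uppercase."""
    letters = [c for c in message if c.isalpha()]
    if len(letters) < min_letters:
        return False  # too short to judge (e.g. "OK")
    upper = sum(1 for c in letters if c.isupper())
    return upper / len(letters) >= 0.8


def normalize(message):
    """Lowercase and strip punctuation so near-identical questions match."""
    return re.sub(r"[^a-z0-9 ]", "", message.lower()).strip()


def flag_conversation(user_messages, repeat_threshold=3):
    """Return the heuristic frustration signals found in one conversation."""
    signals = []
    if any(is_shouting(m) for m in user_messages):
        signals.append("all_caps")
    counts = {}
    for m in user_messages:
        key = normalize(m)
        counts[key] = counts.get(key, 0) + 1
    if any(n >= repeat_threshold for n in counts.values()):
        signals.append("repeated_question")
    return signals


# Hypothetical conversation used only to exercise the heuristics.
sample = [
    "How do I export a report?",
    "how do i export a report",
    "How do I export a report??",
    "WHY IS THIS NOT WORKING",
]
flags = flag_conversation(sample)
```

Simple heuristics like these will miss paraphrased questions and sarcasm, so they are best treated as a starting filter for which conversations a PM should read or replay.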

3. Connect agent usage to business outcomes

Observability platforms track latency and token costs, but they don’t seamlessly tell you whether your agent is driving retention, speeding up workflows, or hurting satisfaction.

To help you answer this, product-centric measurement maps agent usage directly to product experience and business metrics: task completion, conversion rates, user feedback, and workflow speed. This allows PMs to treat each agent like a feature or digital employee whose performance can be reviewed against actual outcomes, not just technical benchmarks.

With a PM-centric measurement tool, you can answer the important questions:

- How does agent usage correlate with conversions? Retention? Satisfaction?

- Which types of users are most engaged and successful with the agent? Least?

- How are users first discovering the agent? Which channels have driven the most adoption?

- Does your agent experience or traditional UI have a stronger correlation with satisfaction and revenue growth?
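
The first of these questions can be sketched in a few lines of Python: compare the conversion rate of users who used the agent with that of users who did not. The user records and the `used_agent` and `converted` fields are hypothetical, and a gap between the two cohorts shows correlation only, not that the agent caused the difference.

```python
def conversion_rate(users):
    """Share of users in the group who converted (0.0 for an empty group)."""
    if not users:
        return 0.0
    return sum(1 for u in users if u["converted"]) / len(users)


def compare_cohorts(users):
    """Split users by agent usage and return each cohort's conversion rate."""
    agent = [u for u in users if u["used_agent"]]
    non_agent = [u for u in users if not u["used_agent"]]
    return {
        "agent_users": conversion_rate(agent),
        "non_agent_users": conversion_rate(non_agent),
    }


# Hypothetical user records; field names are assumptions for this example.
users = [
    {"id": 1, "used_agent": True, "converted": True},
    {"id": 2, "used_agent": True, "converted": False},
    {"id": 3, "used_agent": False, "converted": False},
    {"id": 4, "used_agent": False, "converted": False},
    {"id": 5, "used_agent": True, "converted": True},
    {"id": 6, "used_agent": False, "converted": True},
]

rates = compare_cohorts(users)
```

The same split works for retention or satisfaction by swapping `converted` for another outcome field.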

4. Gather feedback

Eval tools measure accuracy and retrieval performance, but they don’t often include ways to capture what users are thinking or feeling. Are users happy? Confused? Planning to cancel? Without some way to tie agent usage to user feedback, you can answer “what happened,” but not “why.”

PM-first agent measurement gives you a holistic view of user sentiment and satisfaction. Analyze user feedback from before and after agent usage, view it in the context of behavioral data, and watch replays of their behavior. It’s the holy grail of voice of the customer (VOC), and it paints the full picture of your agent’s impact: what users said, what they did, and how they felt.

Case study: How product people are evaluating AI agent performance

The Pendo team uses our PM-centric measurement tool, Agent Analytics, every day. One way we put it to work is by monitoring internal AI usage on popular tools like Claude and ChatGPT. Another way we’ve used it is by monitoring adoption of our AI guide creation tool, which turns plain-language prompts into in-app guides.

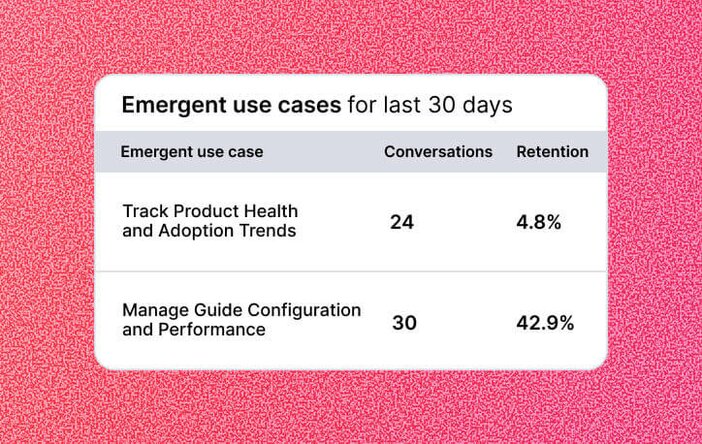

The PM behind our AI guide creation tool, Alejandro, used Agent Analytics’ reports to see who was using the feature, how frequently, and what the use cases were.

When Alejandro found that a popular use case (creating a guide with a video) wasn’t supported, he prioritized this on the roadmap, used guides to communicate what the tool is capable of, and helped level-set user expectations.

Alejandro also watched session replays of agent interactions and found dead clicks happening in the AI guide creation workflow. This gave him much-needed evidence to fight for a dev fix as soon as possible. As Dao puts it, “Agent Analytics doesn’t replace good product fundamentals. But it does act as an accelerator or magnifier of your own skills.”

The first AI agent measurement tool built for PMs

Developers use tracing and eval tools to diagnose why a chain failed. PMs use product-centric measurement tools to identify which use cases are struggling, then hand off specific scenarios to engineering for deep technical diagnosis.

Both roles need specialized measurement, with tools designed for each. Take a self-guided tour of Agent Analytics in action to start driving adoption, reducing friction, and proving your AI ROI.
