For too long, product teams have struggled to find the right metrics to understand product health. Not only are there too many metrics to choose from, but many product metrics, like page views or downloads, are not action-oriented. So we created the Product Engagement Score (PES) to give product leaders a single, actionable metric that describes how their product is performing: one that is easy to generate, understand, and improve upon.

With that vision in mind, we designed PES as a composite score made up of three individual metrics directly tied to user engagement: adoption, stickiness, and growth. Since its launch in January 2021, we’ve added features based on that original vision and valuable customer feedback we’ve collected along the way. These include an Account filter, a historical trending PES widget, and most notably, PES Drilldowns.

But while PES has seen reasonably high adoption and interest from our customers, we also heard concerns, including questions that made us reevaluate how the score itself is calculated. And since we are product managers, we got curious. This curiosity led to further investigation, and then to three key insights:

- PES doesn’t always provide an accurate depiction of product engagement

- PES isn’t always easy to understand

- PES is often extremely volatile, making it hard to improve upon

Yes, you read that right. We got it wrong the first time around. Well, let me rephrase: We shipped something brand new and of value, we listened to our customers, and now we’re taking action to make it even better.

Understanding what needed to change

The first inkling that something was wrong came by way of feedback from users who struggled to understand and use the PES adoption score in an actionable way. Users reported that the two configuration options of “ANY” or “ALL” Core Event usage were either too broad or too specific. For example, very few customers we talked to could reasonably expect their entire user base to use all of their defined Core Events. Yet any Core Event usage, though it captured a picture of adoption, wasn’t particularly helpful either.

The next clue came from users (especially those in customer success) who wanted to see PES at the account level to better understand account health based on actual product engagement. This use case became even more prominent after we teamed up with our data science team to understand how PES can be used as a predictor of churn and retention. In this scenario, the original adoption calculation often gave either a binary 0 or 100 score because of the limited configuration options. Again, not a particularly accurate depiction of adoption.

The final piece of the puzzle was the large volume of feedback we heard about the growth pillar. It ranged from blanket statements like “the score is so volatile” and “growth varies drastically day to day” to confusion about why we use a projected annual growth rate calculation. Either way, the growth score wasn’t providing much value. As we started to dig into how the data and calculation worked together, we realized that the growth calculation penalized you for simply maintaining your user base. But as any product manager knows, user retention is important and needs to be rewarded.

What’s not changing in PES

For the diligent readers and users of PES, you’ll notice I’ve left something out: stickiness. Before I dive into how we approached fixing the problems detailed above, I want to briefly explain what’s not changing and why. Throughout the many months that have passed since launch, we have heard very little from our PES users about stickiness. Since it’s based on an industry-standard calculation that’s been tracked for years, we believe product teams simply “get it.” But in the spirit of this post, we’d love to hear if we’re wrong!

Finding the way to a better PES

Once we deeply understood the problem space, we embarked on the fun part: solutioning. I partnered with our engineering and data science teams to brainstorm other possible calculations that could get at the same spirit of the two pillars in question: adoption and growth. Together, we researched industry best practices and dug into what would be most useful to our users given the data we have in Pendo.

Adoption: Making it more actionable

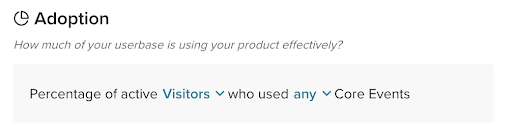

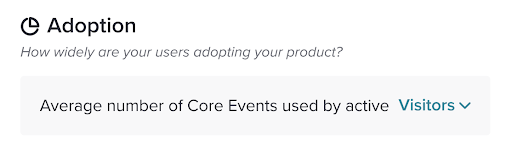

During our initial brainstorm for a new adoption formula, we returned to the primary definition of adoption: a measure of key feature usage. Guided by this principle, we decided to change adoption from “Percentage of active Visitors/Accounts who use ANY/ALL Core Events” to a simple average: “Average number of Core Events adopted by all active Visitors/Accounts.”

In addition to changing from a percentage to an average, we also decided to remove the ANY/ALL configuration option to eliminate some of the complexity and better serve use cases requiring account-level PES data. Together, these two modifications better represent average feature usage.

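Prior adoption calculation: percentage of active Visitors/Accounts who use ANY/ALL Core Events

New adoption calculation: average number of Core Events adopted by all active Visitors/Accounts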
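To make the difference between the two definitions concrete, here is a minimal Python sketch. It is an illustration only, not Pendo's implementation: the function names, the `usage` mapping (visitor ID to the set of events that visitor used), and the sample event names are all assumptions made for this example. It also stops at the raw average and does not attempt to show how that average is converted into the final adoption score.

```python
from typing import Dict, Set


def adoption_any_all(usage: Dict[str, Set[str]], core_events: Set[str], mode: str) -> float:
    """Prior-style adoption: percent of active visitors who used ANY or ALL Core Events."""
    if not usage:
        return 0.0
    if mode == "ANY":
        hits = sum(1 for used in usage.values() if used & core_events)
    elif mode == "ALL":
        hits = sum(1 for used in usage.values() if core_events <= used)
    else:
        raise ValueError("mode must be 'ANY' or 'ALL'")
    return 100.0 * hits / len(usage)


def average_core_events_adopted(usage: Dict[str, Set[str]], core_events: Set[str]) -> float:
    """New-style adoption: average number of Core Events used per active visitor."""
    if not usage:
        return 0.0
    total = sum(len(used & core_events) for used in usage.values())
    return total / len(usage)


# Hypothetical sample data: three Core Events, four active visitors.
core_events = {"create_report", "share_report", "export"}
usage = {
    "v1": {"create_report", "share_report", "export"},
    "v2": {"create_report"},
    "v3": {"create_report", "share_report"},
    "v4": set(),
}

print(adoption_any_all(usage, core_events, "ANY"))      # 75.0
print(adoption_any_all(usage, core_events, "ALL"))      # 25.0
print(average_core_events_adopted(usage, core_events))  # 1.5
```

Note that when `usage` holds a single entry, as it would for one account scored on its own, the ANY/ALL function can only return 0 or 100, while the average still distinguishes between one, two, or three Core Events adopted.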

Once we had the new formula, we calculated the new adoption score for about 10% of our customers to understand how this change would affect scores overall. Most adoption scores went down, but don’t fret! Being able to see and drill into the average adoption of your key features is just as valuable as the previous calculation, if not more so. The new adoption calculation allows you to ask meaningful questions that influence key feature usage, for example:

- How much of my core product is being used/adopted?

- Which Core Event needs my attention?

- Is my average adoption going up or down over time? If it’s going down, what can I do to drive adoption of the Core Events pulling down my average?

Growth: Making it more stable

In our search for a better growth calculation, one of the main guiding principles was to pick a calculation that resulted in a more stable final metric. We decided to base our new calculation on another metric that’s been in and around the industry: the Quick Ratio.

Although it is still more up-and-coming than industry-standard, Quick Ratio has its roots in corporate finance and accounting. In the tech world, it’s often used by venture capital firms to get a feel for how a company is growing. Quick Ratio is a shorthand way to combine growth, retention, and churn into one number that describes how efficiently your product is growing. You can think of it as a way to measure the “heartbeat” of your product, as it shows how many users are joining, staying, and leaving in a given period.

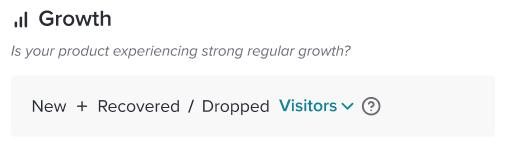

For the purposes of PES, we’ve slightly altered the typical Quick Ratio definition to better fit our platform. The new growth calculation is the sum of new users and recovered users, divided by the number of dropped users.

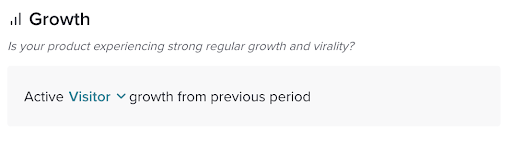

Prior growth calculation: a projected annual growth rate

New growth calculation: (new users + recovered users) / dropped users
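As a rough sketch of the ratio described above, the Python function below computes it for a single period. The function and parameter names are assumptions for illustration, and so is the handling of a period with no dropped users, which the description here does not cover.

```python
import math


def quick_ratio(new_users: int, recovered_users: int, dropped_users: int) -> float:
    """(new users + recovered users) / dropped users for one period."""
    if min(new_users, recovered_users, dropped_users) < 0:
        raise ValueError("user counts must be non-negative")
    gained = new_users + recovered_users
    if dropped_users == 0:
        # Assumption: no losses means an unbounded ratio when anyone was gained,
        # and 0.0 when nothing happened at all in the period.
        return math.inf if gained > 0 else 0.0
    return gained / dropped_users


print(quick_ratio(120, 30, 50))  # 3.0 -> three users gained for every user lost
print(quick_ratio(40, 10, 50))   # 1.0 -> gains exactly offset losses
print(quick_ratio(10, 0, 50))    # 0.2 -> losing users faster than gaining them
```

A value above 1 means the product gained more users than it lost in the period, and a value below 1 means the opposite.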

Once we had the formula in hand, we had to figure out how to fit a ratio spanning from 0 to infinity into the overall PES calculation. With the help of our awesome data science team, we found a way to apply a simple scale factor to the Quick Ratio value so that it fits within the PES triangle from 0 to 100. Last but not least, we checked that the new growth scores are more stable, and indeed they are: when growth is configured to visitors, volatility drops by 54%, and when configured to accounts, it drops by 15%.
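The exact scale factor is not given here, so the following Python sketch only shows the general shape such a mapping could take: multiply the ratio by a constant and cap the result at 100. The function name, the `scale_factor` value of 25, and the cap are all hypothetical choices made for this example, not the values used in PES.

```python
def scale_to_score(ratio: float, scale_factor: float = 25.0) -> float:
    """Map a ratio in [0, infinity) onto a 0-100 score (hypothetical scaling)."""
    if ratio < 0:
        raise ValueError("ratio must be non-negative")
    # Assumed linear scale, capped so that very large or infinite ratios score 100.
    return min(100.0, ratio * scale_factor)


print(scale_to_score(1.0))           # 25.0
print(scale_to_score(2.0))           # 50.0
print(scale_to_score(float("inf")))  # 100.0
```

With these assumed values, any ratio of 4 or higher scores 100. A real implementation would pick the factor from observed data so that typical products land in a useful part of the range.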

A better PES to guide product teams

Put simply, the new and improved Product Engagement Score better describes how your product is performing. It’s simpler to understand, more stable, and easier to improve upon.

The new calculations will apply to your current and historical data, so you can continue to track PES over time. Use your favorite PES features like PES Drilldowns, the trending PES chart, and all the filters to explore the improved PES. Ready to dive in? Check out your new and improved Product Engagement Score in the product today.